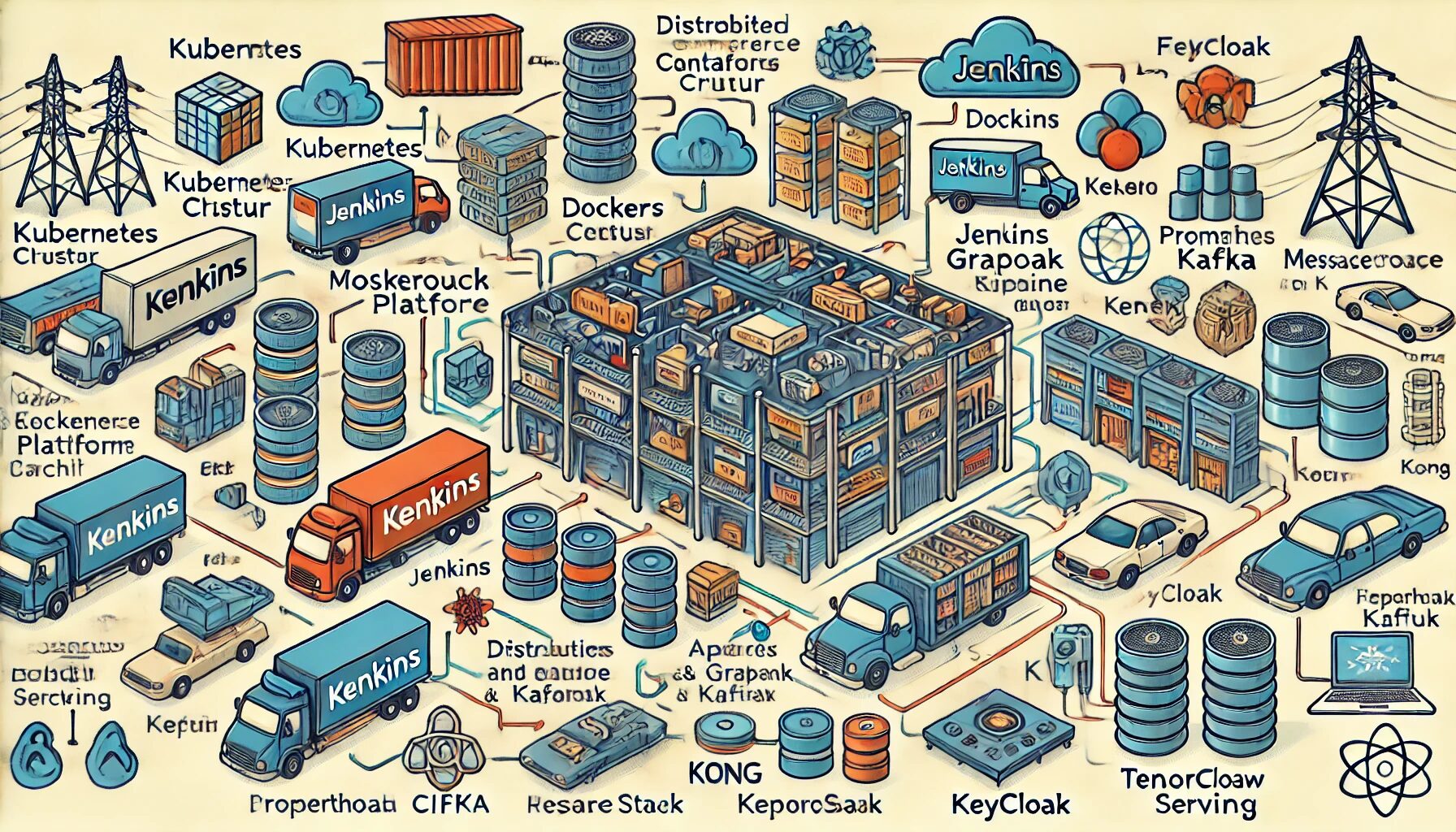

In this lesson, we’ll walk through deploying a secure, scalable e-commerce platform built on Kubernetes, microservices, and a CI/CD pipeline. This tutorial assumes a Debian-based system on which you have sudo privileges to install and configure software.

Prerequisites

- Debian 10 or later

- Basic understanding of Linux, Docker, Kubernetes, and microservices

- A Kubernetes cluster (can be local or cloud-based)

- Basic knowledge of CI/CD tools like Jenkins or GitLab CI
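
The Debian 10 requirement can be checked before installing anything. The sketch below reads `VERSION_ID` from `/etc/os-release`; the function names `debian_major` and `debian_at_least` and the optional path argument are illustrative assumptions, not part of any tool used in this lesson.

```shell
#!/bin/sh
# Print the major release number found in an os-release file.
# The optional path argument (default /etc/os-release) exists only to make
# the function easy to try against a sample file.
debian_major() {
    os_release="${1:-/etc/os-release}"
    # VERSION_ID usually looks like: VERSION_ID="11"
    sed -n 's/^VERSION_ID="\{0,1\}\([0-9][0-9]*\).*/\1/p' "$os_release"
}

# Succeed only when the release is at least the given minimum (10 by default).
# Fails when VERSION_ID is missing, which some rolling images may omit.
debian_at_least() {
    min="${1:-10}"
    major="$(debian_major "${2:-/etc/os-release}")"
    [ -n "$major" ] && [ "$major" -ge "$min" ]
}
```

A possible use at the top of a setup script is `debian_at_least 10 || echo "This lesson assumes Debian 10 or later" >&2`.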

1. Setting Up a Kubernetes Cluster

Installing Docker

First, we need to install Docker:

    sudo apt update
    sudo apt install -y apt-transport-https ca-certificates curl software-properties-common
    curl -fsSL https://download.docker.com/linux/debian/gpg | sudo apt-key add -
    sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/debian $(lsb_release -cs) stable"
    sudo apt update
    sudo apt install -y docker-ce
    sudo systemctl enable docker
    sudo systemctl start docker

Installing Kubernetes Tools

Next, install kubeadm, kubelet, and kubectl:

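    sudo apt update
    sudo apt install -y apt-transport-https ca-certificates curl
    curl -fsSL https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
    echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
    sudo apt update
    sudo apt install -y kubelet kubeadm kubectl
    sudo apt-mark hold kubelet kubeadm kubectl

Note that sudo must apply to the command that needs root (apt-key and the write to /etc/apt), not to curl or cat. The apt.kubernetes.io repository used here is the legacy Google-hosted one, which the Kubernetes project has since retired in favor of pkgs.k8s.io; if apt update cannot reach it, take the key and repository lines from the current kubeadm installation guide instead.

Setting Up the Kubernetes Cluster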

Initialize the Kubernetes cluster:

    sudo kubeadm init --pod-network-cidr=192.168.0.0/16

Set up the kubeconfig for your regular (non-root) user:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install a network plugin (Calico):

    kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

2. Deploying Microservices

Creating a Sample Microservice

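We’ll create a simple Node.js microservice for user management. It depends on Express, so the project directory needs a package.json that lists express as a dependency (for example, created with npm init -y followed by npm install express).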

Dockerfile

    FROM node:14
    WORKDIR /app
    COPY package*.json ./
    RUN npm install
    COPY . .
    EXPOSE 3000
    CMD ["node", "index.js"]

index.js

    const express = require('express');

    const app = express();
    app.use(express.json());

    // Return a hard-coded user list; a real service would query a database.
    app.get('/users', (req, res) => {
      res.send([{ id: 1, name: 'John Doe' }]);
    });

    const PORT = process.env.PORT || 3000;
    app.listen(PORT, () => {
      console.log(`Server running on port ${PORT}`);
    });

Building and Pushing Docker Image

    docker build -t your-dockerhub-username/user-service:latest .
    docker push your-dockerhub-username/user-service:latest

Kubernetes Deployment and Service

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: user-service
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: user-service
      template:
        metadata:
          labels:
            app: user-service
        spec:
          containers:
            - name: user-service
              image: your-dockerhub-username/user-service:latest
              ports:
                - containerPort: 3000
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: user-service
    spec:
      selector:
        app: user-service
      ports:
        - protocol: TCP
          port: 80
          targetPort: 3000
      type: LoadBalancer

Save the Deployment (everything above the --- separator) as user-service-deployment.yaml and the Service as user-service-service.yaml, then apply both. On a bare kubeadm cluster with no load-balancer implementation, the Service's external IP stays in the pending state; switch the type to NodePort in that case.

    kubectl apply -f user-service-deployment.yaml
    kubectl apply -f user-service-service.yaml

3. Continuous Integration and Deployment (CI/CD)
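
The manifests and the pipeline in this lesson both use the mutable `:latest` tag. With a mutable tag it is hard to tell which build a pod is running, and re-applying an unchanged manifest does not trigger a new rollout. One common alternative is to tag each image with the commit it was built from. The sketch below shows the idea; `image_ref` is a hypothetical helper name, and the 12-character prefix length is an arbitrary choice.

```shell
#!/bin/sh
# Build an image reference of the form <repository>:<short commit id>.
# $1 = image repository, $2 = full commit hash (for example from `git rev-parse HEAD`).
image_ref() {
    repo="$1"
    commit="$2"
    # Keep the first 12 characters of the hash as the tag.
    short="$(printf '%s' "$commit" | cut -c1-12)"
    printf '%s:%s\n' "$repo" "$short"
}
```

In a build step this could be used as `docker build -t "$(image_ref your-dockerhub-username/user-service "$(git rev-parse HEAD)")" .`, with the same reference substituted into the Deployment's image field.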

Setting Up Jenkins

Install Jenkins:

    wget -q -O - https://pkg.jenkins.io/debian/jenkins.io.key | sudo apt-key add -
    sudo sh -c 'echo deb http://pkg.jenkins.io/debian-stable binary/ > /etc/apt/sources.list.d/jenkins.list'
    sudo apt update
    sudo apt install -y jenkins
    sudo systemctl start jenkins
    sudo systemctl enable jenkins

Configure Jenkins (access via http://your-server-ip:8080):

- Install necessary plugins: Kubernetes, Docker Pipeline, Git, etc.

- Create a new pipeline job for your microservice.

Example Jenkins Pipeline

    pipeline {
        agent any
        stages {
            stage('Checkout') {
                steps {
                    git 'https://github.com/your-repo/user-service.git'
                }
            }
            stage('Build') {
                steps {
                    script {
                        docker.build('your-dockerhub-username/user-service:latest')
                    }
                }
            }
            stage('Push') {
                steps {
                    script {
                        docker.withRegistry('https://index.docker.io/v1/', 'dockerhub-credentials-id') {
                            docker.image('your-dockerhub-username/user-service:latest').push()
                        }
                    }
                }
            }
            stage('Deploy') {
                steps {
                    // Assumes kubectl and a valid kubeconfig are available on the Jenkins agent.
                    sh 'kubectl apply -f user-service-deployment.yaml'
                    sh 'kubectl apply -f user-service-service.yaml'
                }
            }
        }
    }

4. Database Cluster

Installing CockroachDB

Download and install CockroachDB:

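    wget -qO- https://binaries.cockroachdb.com/cockroach-v20.2.9.linux-amd64.tgz | tar xvz
    sudo cp -i cockroach-v20.2.9.linux-amd64/cockroach /usr/local/bin/

Initializing CockroachDB Cluster

Start a node in the background, then initialize the cluster. cockroach start expects a --join list even when there is only one node. The --insecure flag disables TLS and authentication, so use it only for local testing.

    cockroach start --insecure --listen-addr=localhost --join=localhost --background
    cockroach init --insecure --host=localhost

Connecting to CockroachDB

    cockroach sql --insecure --host=localhost

5. Message Queues and Streaming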

Installing Apache Kafka

Download and install Kafka:

    wget https://archive.apache.org/dist/kafka/2.7.0/kafka_2.13-2.7.0.tgz
    tar -xzf kafka_2.13-2.7.0.tgz
    cd kafka_2.13-2.7.0

Starting Kafka and Zookeeper

Both scripts run in the foreground, so start each in its own terminal (or pass -daemon as the first argument), ZooKeeper first:

    bin/zookeeper-server-start.sh config/zookeeper.properties
    bin/kafka-server-start.sh config/server.properties

Creating a Kafka Topic

    bin/kafka-topics.sh --create --topic user-events --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1

6. Logging and Monitoring

Setting Up ELK Stack

Installing Elasticsearch

    wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -
    sudo sh -c 'echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" > /etc/apt/sources.list.d/elastic-7.x.list'
    sudo apt update
    sudo apt install -y elasticsearch
    sudo systemctl start elasticsearch
    sudo systemctl enable elasticsearch

Installing Logstash

    sudo apt install -y logstash

Configuring Logstash

Create a config file /etc/logstash/conf.d/logstash.conf:

    input {
      beats {
        port => 5044
      }
    }

    output {
      elasticsearch {
        hosts => ["localhost:9200"]
        index => "logstash-%{+YYYY.MM.dd}"
      }
    }

Start Logstash:

    sudo systemctl start logstash
    sudo systemctl enable logstash

Installing Kibana

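    sudo apt install -y kibana
    sudo systemctl start kibana
    sudo systemctl enable kibana

Access Kibana via http://your-server-ip:5601. Kibana listens only on localhost by default; to reach it from another machine, set server.host in /etc/kibana/kibana.yml and restart the service.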
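
The `index => "logstash-%{+YYYY.MM.dd}"` setting in the Logstash output makes Logstash write to one Elasticsearch index per day, named after the event's UTC date. A quick way to confirm that events are arriving is to ask Elasticsearch for that day's document count. The helper names `logstash_index` and `count_url` below are illustrative assumptions; `_count` is the standard Elasticsearch count endpoint.

```shell
#!/bin/sh
# Map a YYYY-MM-DD date to the index name produced by
# index => "logstash-%{+YYYY.MM.dd}". Defaults to today's UTC date.
logstash_index() {
    day="${1:-$(date -u +%Y-%m-%d)}"
    printf 'logstash-%s\n' "$(printf '%s' "$day" | tr '-' '.')"
}

# Build the URL of the _count endpoint for that day's index.
# $1 = date (YYYY-MM-DD), $2 = Elasticsearch host:port (default localhost:9200).
count_url() {
    printf 'http://%s/%s/_count\n' "${2:-localhost:9200}" "$(logstash_index "$1")"
}
```

With Elasticsearch running, `curl -s "$(count_url 2021-03-05)"` would return the number of documents indexed for that date, and a missing index suggests that no events reached Logstash that day.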

Setting Up Prometheus and Grafana

Installing Prometheus

    wget https://github.com/prometheus/prometheus/releases/download/v2.25.0/prometheus-2.25.0.linux-amd64.tar.gz
    tar -xzf prometheus-2.25.0.linux-amd64.tar.gz
    cd prometheus-2.25.0.linux-amd64
    ./prometheus --config.file=prometheus.yml

Installing Grafana

    sudo apt install -y apt-transport-https software-properties-common
    wget -q -O - https://packages.grafana.com/gpg.key | sudo apt-key add -
    echo "deb https://packages.grafana.com/oss/deb stable main" | sudo tee /etc/apt/sources.list.d/grafana.list
    sudo apt update
    sudo apt install -y grafana
    sudo systemctl start grafana-server
    sudo systemctl enable grafana-server

Access Grafana via http://your-server-ip:3000.
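
The prometheus.yml bundled with the release only scrapes Prometheus itself, so Grafana will have little to display until more targets are added. The sketch below prints a static scrape job in the layout that file uses. The function name `scrape_job` is hypothetical, and so is the example target `localhost:9100` (the conventional node_exporter port, which this lesson does not install).

```shell
#!/bin/sh
# Print one scrape job entry, indented to sit under the top-level
# scrape_configs: key of prometheus.yml.
# $1 = job name, remaining arguments = host:port targets.
scrape_job() {
    job="$1"
    shift
    printf '  - job_name: "%s"\n' "$job"
    printf '    static_configs:\n'
    printf '      - targets:\n'
    for target in "$@"; do
        printf '          - "%s"\n' "$target"
    done
}
```

Assuming `scrape_configs:` is the last section of the file, `scrape_job node localhost:9100 >> prometheus.yml` appends the job, and `./promtool check config prometheus.yml` (promtool ships in the same tarball) can validate the result before Prometheus is restarted.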

7. Security and Compliance

Setting Up Keycloak

Download and install Keycloak:

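    wget https://github.com/keycloak/keycloak/releases/download/12.0.4/keycloak-12.0.4.tar.gz
    tar -xzf keycloak-12.0.4.tar.gz
    cd keycloak-12.0.4
    bin/add-user-keycloak.sh -u admin -p password
    bin/standalone.sh

Replace password with a strong admin password. Access Keycloak via http://your-server-ip:8080/auth. standalone.sh binds to localhost by default; add -b 0.0.0.0 to accept connections from other machines.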
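
Services that obtain or validate tokens need the realm's OpenID Connect endpoints. In the WildFly-based distribution installed above, these live under the /auth prefix. The sketch below builds the two URLs most clients start from; the function names and the realm name `shop` used in the usage note are assumptions for illustration.

```shell
#!/bin/sh
# $1 = Keycloak base URL (for example http://your-server-ip:8080), $2 = realm name.

# Token endpoint, used to exchange credentials or codes for tokens.
keycloak_token_url() {
    printf '%s/auth/realms/%s/protocol/openid-connect/token\n' "$1" "$2"
}

# Discovery document, which lists every OpenID Connect endpoint of the realm.
keycloak_discovery_url() {
    printf '%s/auth/realms/%s/.well-known/openid-configuration\n' "$1" "$2"
}
```

Once a realm exists, `curl -s "$(keycloak_discovery_url http://your-server-ip:8080 shop)"` returns a JSON document from which a client library can read the issuer, token, and key endpoints instead of hard-coding them.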

Configuring Network Policies

Create a network policy, saved as network-policy.yaml, that allows pods labeled role: backend to connect to pods labeled role: frontend:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-frontend
      namespace: default
    spec:
      podSelector:
        matchLabels:
          role: frontend
      policyTypes:
        - Ingress
      ingress:
        - from:
            - podSelector:
                matchLabels:
                  role: backend

Apply the network policy:

    kubectl apply -f network-policy.yaml

Setting Up Let’s Encrypt for HTTPS

Install Certbot:

    sudo apt update
    sudo apt install -y certbot python3-certbot-nginx

Obtain a certificate:

    sudo certbot --nginx -d your-domain.com -d www.your-domain.com

Set up auto-renewal:

    sudo systemctl enable certbot.timer

Configuring Fail2ban

Install Fail2ban:

    sudo apt install -y fail2ban

Configure Fail2ban for NGINX:

Create a new configuration file /etc/fail2ban/jail.local:

    [nginx-http-auth]
    enabled = true
    port = http,https
    filter = nginx-http-auth
    logpath = /var/log/nginx/error.log
    maxretry = 3

Start and enable Fail2ban:

    sudo systemctl start fail2ban
    sudo systemctl enable fail2ban

8. Backup and Disaster Recovery

Installing Velero

Install Velero CLI:

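    wget https://github.com/vmware-tanzu/velero/releases/download/v1.6.3/velero-v1.6.3-linux-amd64.tar.gz
    tar -xzf velero-v1.6.3-linux-amd64.tar.gz
    sudo mv velero-v1.6.3-linux-amd64/velero /usr/local/bin/

Set up Velero with a storage provider (e.g., AWS S3). Velero ships provider support as separate plugins, so the install command also needs --plugins; the plugin version below is the one listed as compatible with Velero 1.6 and should be checked against the plugin's compatibility table.

    velero install --provider aws \
        --plugins velero/velero-plugin-for-aws:v1.2.0 \
        --bucket your-bucket-name \
        --secret-file ./credentials-velero \
        --use-volume-snapshots=false \
        --backup-location-config region=your-region

Scheduling Backups

Create a schedule that backs up the cluster every day at 01:00:

    velero schedule create daily-backup --schedule="0 1 * * *"

9. APIs and Web Frontend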

Setting Up an API Gateway

Install Kong:

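    echo "deb http://kong.bintray.com/kong-deb stretch main" | sudo tee /etc/apt/sources.list.d/kong.list
    sudo apt update
    sudo apt install -y kong

Bintray, which hosts this repository, was shut down in 2021; if apt update cannot reach it, take the current repository line from Kong's installation documentation.

Configure Kong with a database (PostgreSQL). The migration step expects a reachable PostgreSQL instance whose connection settings are defined in /etc/kong/kong.conf:

    sudo kong migrations bootstrap
    sudo kong start

Creating a React Frontend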

Create a new React application:

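    npx create-react-app frontend
    cd frontend
    npm start

npm start runs a development server for local testing. For deployment, build the production bundle and copy it to the directory NGINX will serve:

    npm run build
    sudo mkdir -p /var/www/frontend
    sudo cp -r build /var/www/frontend/

Deploy the frontend using NGINX:

    sudo apt install -y nginx
    sudo rm /etc/nginx/sites-enabled/default
    sudo nano /etc/nginx/sites-available/frontend

Add the following configuration: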

    # HTTPS site serving the React build.
    server {
        server_name your-domain.com;

        location / {
            root /var/www/frontend/build;
            try_files $uri /index.html;
        }

        listen [::]:443 ssl ipv6only=on;
        listen 443 ssl;
        ssl_certificate /etc/letsencrypt/live/your-domain.com/fullchain.pem;
        ssl_certificate_key /etc/letsencrypt/live/your-domain.com/privkey.pem;
        include /etc/letsencrypt/options-ssl-nginx.conf;
        ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;
    }

    # Plain HTTP: redirect to HTTPS. Port 80 is declared only here so that
    # this block, not the one above, handles unencrypted requests.
    server {
        if ($host = your-domain.com) {
            return 301 https://$host$request_uri;
        }

        listen 80;
        listen [::]:80;
        server_name your-domain.com;
        return 404;
    }

Enable the configuration and restart NGINX:

    sudo ln -s /etc/nginx/sites-available/frontend /etc/nginx/sites-enabled/
    sudo systemctl restart nginx

10. Machine Learning Integration

Deploying ML Models with TensorFlow Serving

Install TensorFlow Serving:

    echo "deb [arch=amd64] http://storage.googleapis.com/tensorflow-serving-apt stable tensorflow-model-server tensorflow-model-server-universal" | sudo tee /etc/apt/sources.list.d/tensorflow-serving.list
    curl -s https://storage.googleapis.com/tensorflow-serving-apt/tensorflow-serving.release.pub.gpg | sudo apt-key add -
    sudo apt update
    sudo apt install -y tensorflow-model-server

Serving a Model

Start TensorFlow Serving:

    tensorflow_model_server --rest_api_port=8501 --model_name=my_model --model_base_path=/models/my_model

Creating Data Pipelines with Apache Airflow

Install Airflow:

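    sudo apt install -y python3-pip
    pip3 install apache-airflow
    airflow db init

Start the Airflow web server:

    airflow webserver --port 8080

Access Airflow via http://your-server-ip:8080. Jenkins and Keycloak also default to port 8080 in this lesson, so choose a different --port if they run on the same host.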

This concludes the lesson on deploying a secure, scalable e-commerce platform on a Debian system. By following these steps, you will have a working baseline that covers container orchestration, microservices, CI/CD, a distributed database, message queues, logging and monitoring, security, backups, and machine learning model serving.