Introduction

This lesson will guide you through the process of selecting and deploying an open-source ChatGPT-like conversational AI system on self-hosted infrastructure. By the end of this lesson, you will understand the criteria for choosing a suitable model, how to set up the necessary environment, and the steps to deploy and interact with the AI system.

Objectives

- Understand the key factors in selecting an open-source conversational AI model.

- Learn how to set up a self-hosted environment for deploying the model.

- Gain practical experience in deploying and testing the AI system.

Part 1: Selecting an Open-Source Conversational AI Model

Criteria for Selection

- Model Performance:
  - Accuracy and fluency in generating human-like responses.
  - Ability to handle context and maintain coherent conversations.
- Model Size and Resources:
  - Hardware requirements (CPU, GPU, RAM).
  - Storage needs for model files and dependencies.
- Community and Support:
  - Availability of documentation, forums, and community support.
  - Frequency of updates and maintenance.
- Licensing and Use Case:
  - Licensing terms and any usage restrictions.
  - Suitability for your specific application or domain.
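The Model Size and Resources criterion can be roughed out before downloading anything: the weights alone take about (number of parameters) × (bytes per parameter). The sketch below assumes 4 bytes per parameter for 32-bit floats, 2 for 16-bit floats, and 1 for 8-bit quantization. It deliberately ignores activations, the attention cache, and framework overhead, so treat the result as a lower bound rather than a sizing guarantee.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate; activations, attention cache and runtime
# overhead are NOT included, so the result is a lower bound.

BYTES_PER_PARAM = {
    "float32": 4,  # full precision
    "float16": 2,  # half precision, as used in mixed-precision inference
    "int8": 1,     # 8-bit quantized weights
}


def weight_memory_gib(num_params: float, dtype: str = "float32") -> float:
    """Return the approximate size of the weights in GiB for one precision."""
    if dtype not in BYTES_PER_PARAM:
        raise ValueError(f"unknown dtype: {dtype}")
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    # GPT-Neo 2.7B, the model loaded later in this lesson, has about 2.7e9 parameters
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: {weight_memory_gib(2.7e9, dtype):5.1f} GiB")
```

For GPT-Neo 2.7B this prints roughly 10.1 GiB (float32), 5.0 GiB (float16), and 2.5 GiB (int8), which shows why lower precision is often the first lever when hardware is tight.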

Recommended Models

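- GPT-Neo and GPT-J by EleutherAI:
  - General-purpose language models: GPT-Neo comes in 125M, 1.3B, and 2.7B parameter sizes, and GPT-J has 6B parameters.
  - Usable for a variety of conversational tasks, but they are not chat-tuned, so good results depend on careful prompting or fine-tuning.
- BLOOM by BigScience:
  - Multilingual (trained on 46 natural languages and 13 programming languages), with openly downloadable weights released under the BigScience RAIL license, which restricts certain uses.
  - Available in sizes from 560M to 176B parameters.
  - Ideal for applications requiring multiple languages.
- DialoGPT by Microsoft:
  - Fine-tuned for conversational response generation on Reddit discussion threads.
  - Based on the GPT-2 architecture (small, medium, and large variants), offering a balance between performance and resource needs.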
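Because DialoGPT is fine-tuned on dialogue, it expects the conversation as one sequence in which every turn ends with the end-of-sequence token (<|endoftext|> for GPT-2-based tokenizers), following the usage shown on its Hugging Face model card. The sketch below builds that string from a list of turns and keeps only the most recent ones so the input stays inside the model's 1024-token context window. The function name build_dialogpt_input and the max_turns parameter are illustrative, not part of any library.

```python
# Sketch: format a conversation for DialoGPT-style models.
# Assumption: each turn is terminated by the tokenizer's EOS token, as in the
# DialoGPT model card example (tokenizer.eos_token is "<|endoftext|>" for GPT-2).

from typing import List

GPT2_EOS = "<|endoftext|>"


def build_dialogpt_input(turns: List[str], max_turns: int = 6, eos: str = GPT2_EOS) -> str:
    """Join the last `max_turns` turns, each followed by the EOS token.

    Dropping the oldest turns is a crude way to respect the context window;
    a production system would count tokens instead of turns.
    """
    if max_turns < 1:
        raise ValueError("max_turns must be at least 1")
    recent = turns[-max_turns:]
    return "".join(turn + eos for turn in recent)


if __name__ == "__main__":
    history = ["Hi there!", "Hello! How are you?", "Great, thanks. Any plans today?"]
    print(build_dialogpt_input(history, max_turns=2))
    # → Hello! How are you?<|endoftext|>Great, thanks. Any plans today?<|endoftext|>
```

The resulting string would then be tokenized with return_tensors="pt" and passed to model.generate for a checkpoint such as microsoft/DialoGPT-medium; the bot's reply is whatever text the model generates after the final EOS token.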

Part 2: Setting Up a Self-Hosted Environment

Prerequisites

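- Hardware:
  - A machine with enough RAM (or GPU memory) to hold the model weights, plus disk space for the downloaded files. As a reference point, GPT-Neo 2.7B needs about 10.8 GB for its weights at 32-bit precision (2.7 billion parameters × 4 bytes).
  - Recommended: an NVIDIA GPU with CUDA drivers for much faster inference.
- Software:
  - Operating System: Linux (preferred for better compatibility).
  - Docker: For containerized deployment.
  - Python 3 with venv and pip: For running scripts and managing dependencies.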
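A short read-only script can confirm these prerequisites before anything is installed or downloaded. The sketch below uses only the standard library; the 20 GiB free-disk threshold and the Python 3.9 minimum are assumptions to adjust for the chosen model and current library requirements. It looks for docker and nvidia-smi on the PATH and only queries CUDA if PyTorch happens to be importable.

```python
# Sketch: report whether this machine meets the lesson's prerequisites.
# Assumptions: the 20 GiB disk threshold and Python 3.9 minimum are
# illustrative; nothing is installed or modified by this check.

import importlib.util
import platform
import shutil
import sys


def check_prerequisites(path: str = ".", min_free_gib: float = 20.0) -> dict:
    free_gib = shutil.disk_usage(path).free / 2**30
    report = {
        "os": platform.system(),                     # "Linux" is preferred
        "python": platform.python_version(),
        "python_ok": sys.version_info >= (3, 9),     # assumed minimum version
        "docker_on_path": shutil.which("docker") is not None,
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,  # NVIDIA driver hint
        "free_disk_gib": round(free_gib, 1),
        "disk_ok": free_gib >= min_free_gib,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    # Query CUDA only when PyTorch is importable, so the check has no hard dependency
    if report["torch_installed"]:
        import torch
        report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    for key, value in check_prerequisites().items():
        print(f"{key:>20}: {value}")
```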

Steps to Set Up

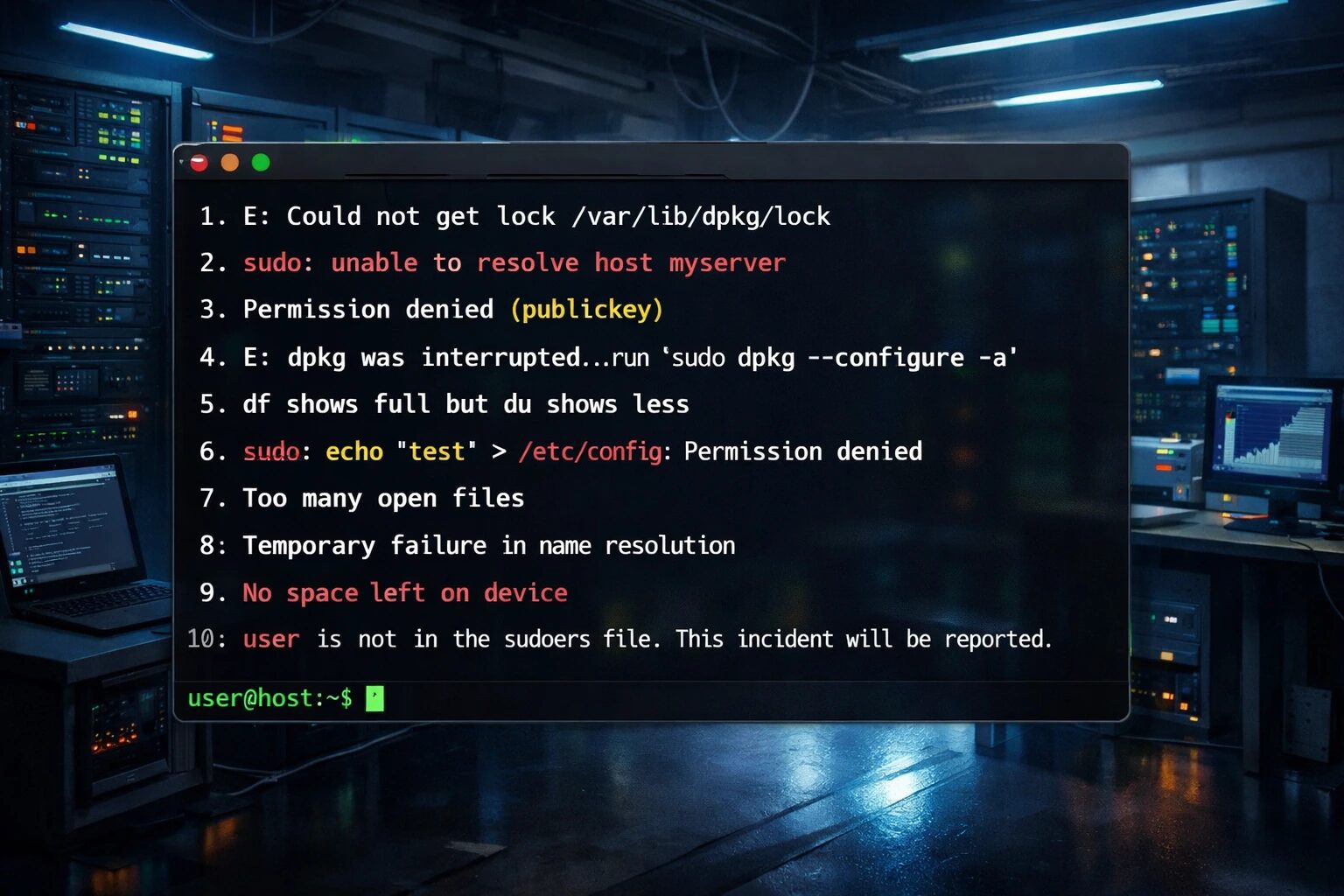

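- Install Docker:
  - Follow the official Docker installation guide for your operating system. On Ubuntu or Debian, the packages below become available only after adding Docker's apt repository as the guide describes.

```shell
sudo apt-get update
sudo apt-get install -y docker-ce docker-ce-cli containerd.io
```

- Pull the Docker Image:
  - Find an official or community-supported Docker image for your selected model and check its documentation for the port and API it exposes. The image name below is illustrative; confirm that it exists in your registry before relying on it.

```shell
docker pull eleutherai/gpt-neo:latest
```

- Set Up Python Environment:
  - Install Python and create a virtual environment.

```shell
sudo apt-get install -y python3 python3-venv
python3 -m venv ai_env
source ai_env/bin/activate
```

- Install Required Libraries:
  - Use pip inside the activated environment to install PyTorch and Hugging Face Transformers.

```shell
pip install torch transformers
```

Part 3: Deploying and Interacting with the AI System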
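Before deploying, a quick check can confirm that the environment set up in Part 2 is the one actually in use. The sketch below relies on two standard-library facts: inside a venv, sys.prefix points at the environment while sys.base_prefix still points at the base installation, and importlib.metadata reports installed distribution versions. The helper names are illustrative.

```python
# Sketch: confirm the Part 2 setup before deploying.
# - in_virtualenv(): True when running inside a venv (sys.prefix differs from sys.base_prefix)
# - installed_versions(): installed version per package, or None if missing

import sys
from importlib import metadata

REQUIRED = ("torch", "transformers")


def in_virtualenv() -> bool:
    return sys.prefix != sys.base_prefix


def installed_versions(packages=REQUIRED) -> dict:
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return versions


if __name__ == "__main__":
    print("virtual environment active:", in_virtualenv())
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'MISSING (run: pip install ' + name + ')'}")
```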

Deploying the Model

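- Start the Docker Container:
  - Run the container in the background, mapping the port its server listens on to the host. Port 5000 is an assumption here; use the port documented for your image.

```shell
docker run -d --name gpt-neo -p 5000:5000 eleutherai/gpt-neo
```

- Load the Model in Python:
  - As an alternative to the container, load the model directly with Hugging Face Transformers; this script does not talk to the container. The first run downloads roughly 10 GB of weights for GPT-Neo 2.7B.

```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "EleutherAI/gpt-neo-2.7B"
device = "cuda" if torch.cuda.is_available() else "cpu"

# Download the tokenizer and weights on first use, then load them onto the device
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name).to(device)

def generate_response(prompt):
    # Tokenize; returns input_ids and attention_mask tensors
    inputs = tokenizer(prompt, return_tensors="pt").to(device)
    # Generate up to 100 new tokens (max_length would also count the prompt);
    # GPT-Neo has no pad token, so reuse EOS to avoid the padding warning
    outputs = model.generate(**inputs, max_new_tokens=100, pad_token_id=tokenizer.eos_token_id)
    # Decode only the newly generated tokens, not the echoed prompt
    new_tokens = outputs[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)

prompt = "Hello, how can I assist you today?"
response = generate_response(prompt)
print(response)
```

Testing and Iterating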
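Testing is easier to repeat with a fixed set of prompts run against the same function each time, such as generate_response from the script in "Load the Model in Python". The sketch below is a minimal smoke-test harness under that assumption: it accepts any prompt-to-text callable, records exceptions instead of stopping, and marks a case as passing only when a non-empty string comes back. The prompt list and the pass criterion are illustrative placeholders for domain-specific cases.

```python
# Sketch: a repeatable smoke test for any prompt -> response function.
# The prompts and the "non-empty reply" check are illustrative assumptions.

from typing import Callable, Dict, List

SMOKE_PROMPTS = [
    "Hello, how can I assist you today?",
    "Summarize the benefits of self-hosting in one sentence.",
    "What is the capital of France?",
]


def smoke_test(generate: Callable[[str], str], prompts: List[str] = SMOKE_PROMPTS) -> List[Dict]:
    results = []
    for prompt in prompts:
        try:
            response, error = generate(prompt), None
        except Exception as exc:  # record the failure and keep testing
            response, error = "", repr(exc)
        ok = error is None and isinstance(response, str) and bool(response.strip())
        results.append({"prompt": prompt, "response": response, "ok": ok, "error": error})
    return results


if __name__ == "__main__":
    # Stand-in generator so the harness runs without a model;
    # pass generate_response instead once the model is loaded.
    echo = lambda p: f"(echo) {p}"
    for r in smoke_test(echo):
        print("PASS" if r["ok"] else "FAIL", "|", r["prompt"])
```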

- Test the Deployment:
  - Interact with the AI system using a varied set of prompts (greetings, factual questions, multi-turn exchanges) and check that the responses are coherent and on-topic.
- Optimize and Fine-Tune:
  - Fine-tune the model on domain-specific data if needed.
  - Optimize inference performance, for example with mixed precision (16-bit floats) or quantization (8-bit or 4-bit weights).
- Monitor and Maintain:
  - Set up monitoring to track the system's latency, error rate, and usage.
  - Regularly update the model and dependencies to incorporate improvements and fixes.
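Monitoring can start small: wrapping the generation function so every call is counted and timed already yields request volume, error count, and latency. The sketch below assumes a single-process deployment and keeps the numbers in memory; a production setup would export them to a dedicated monitoring system instead. MonitoredGenerator is a hypothetical helper, not part of Transformers or Docker.

```python
# Sketch: lightweight in-process monitoring of requests, errors and latency.
# Assumption: single process; metrics live in memory and are lost on restart.

import statistics
import time
from typing import Callable, List


class MonitoredGenerator:
    def __init__(self, generate: Callable[[str], str]):
        self.generate = generate
        self.latencies: List[float] = []  # seconds per successful call
        self.errors = 0

    def __call__(self, prompt: str) -> str:
        start = time.perf_counter()
        try:
            response = self.generate(prompt)
        except Exception:
            self.errors += 1
            raise  # count the failure but do not hide it from the caller
        self.latencies.append(time.perf_counter() - start)
        return response

    def summary(self) -> dict:
        n = len(self.latencies)
        return {
            "requests": n + self.errors,
            "errors": self.errors,
            "mean_latency_s": statistics.mean(self.latencies) if n else None,
            "max_latency_s": max(self.latencies) if n else None,
        }


if __name__ == "__main__":
    monitored = MonitoredGenerator(lambda p: p.upper())  # stand-in for generate_response
    monitored("hello")
    monitored("how are you?")
    print(monitored.summary())
```

Wrapping keeps the model code untouched: the same object is called exactly like generate_response, so it can be dropped into an existing script or server handler.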

Conclusion

Deploying an open-source ChatGPT-like conversational AI system on self-hosted infrastructure involves selecting a model carefully, setting up a suitable environment, and following systematic deployment steps. With these steps in place, you can build interactive conversational applications on open models that run entirely on infrastructure you control.